When I joined Kubex last year, the company was already well aware of the growing power of large language models (LLMs). As a company focused on intelligent resource optimization for Kubernetes, GPUs, and cloud infrastructure, generative AI didn’t feel like a threat so much as a natural extension of where the industry was heading. Kubex had already invested heavily in machine learning, but it was becoming clear that foundation models could unlock an entirely new class of capabilities for our customers.

What wasn’t clear was how to do that well.

The potential was obvious enough that Kubex created a new role—Head of AI Engineering—to explore what leveraging LLMs could really mean for the business. But beyond that high-level conviction, there were more questions than answers. What problems should we even be trying to solve? How much of this new stack would we need to own ourselves? Were we expected to become experts in training, hosting, and fine-tuning models just to stay relevant?

That uncertainty landed squarely on my desk on day one. And I’ll be upfront: I was not, at that point, an expert in LLMs. What I did have was a strong sense that we didn’t need to compete with the foundation models themselves. They were already extraordinarily capable, and improving faster than any single company could hope to match. Our opportunity was different. We needed to teach these models about our product and about our customers and their infrastructure, and then use that shared understanding to solve real, practical problems.

And so, we built an MCP server. This is the story of why MCP was the right choice for us and why I’m so happy with the results.

But wait, isn’t MCP old news?

The Model Context Protocol (MCP) is a standardized way of connecting an LLM to tools and resources that it can use to access information and take actions. MCP unlocked much of the value in LLMs’ burgeoning capacity for tool use by giving people a way to build whatever LLM tooling they need without locking themselves into a particular vendor. Adoption exploded last year, hand in hand with the rise of agentic coding tools like Claude Code, which could combine different tools in novel and interesting ways to solve a huge universe of problems.
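For readers who haven’t looked under the hood: MCP messages are JSON-RPC 2.0 requests. A client discovers a server’s tools with `tools/list` and invokes one with `tools/call`, passing the tool’s `name` and `arguments`. The sketch below builds those two requests using only the Python standard library. The tool name `get_node_memory_saturation` and its arguments are hypothetical, and the transport (stdio or HTTP) and the initialization handshake that precede these calls are left out.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC 2.0 request IDs let the client match each response to its request.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Step 1: ask the server which tools it exposes.
list_req = jsonrpc_request("tools/list")

# Step 2: invoke one tool. This tool name and its arguments are hypothetical.
call_req = jsonrpc_request(
    "tools/call",
    {
        "name": "get_node_memory_saturation",
        "arguments": {"cluster": "prod-east", "node": "node-7"},
    },
)

print(list_req)
print(call_req)
```

Because every MCP server speaks this same protocol, any MCP-capable client can discover and call its tools without vendor-specific glue.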

It’s important to note, however, that the usefulness of MCP goes well beyond coding. Even as the hype has shifted to skills, MCP retains key advantages that make it a solid foundation for AI system integration. Most importantly, it has a well-developed authorization story built on OAuth 2.1, which lets an MCP server provide secure, authenticated access to data while still giving users a plug-and-play experience. And because the model calls tools rather than running code, it doesn’t need a code-execution sandbox, which makes MCP suitable for lightweight agents in an AI control plane.

Our approach

A fundamental question I’ve been asked several times in the course of this project is: we already have a GraphQL API, so why not simply give the model access to that instead of adding a new layer that is essentially a different kind of API server? In fact, the first version of our MCP server was little more than a pass-through, exposing a few tools that mapped directly onto key GraphQL APIs. This gave us our first big successes and demonstrated the ingenuity these models can bring to bear. But our MCP server doesn’t look like that anymore, and therein lies the answer. The truth is that most conventional APIs aren’t ideal for use by LLMs. Think of all the properties with misleading names, the endpoints that are no longer recommended but remain for backwards compatibility, the long chains of API calls needed to get simple answers, and the relationships in the data model that experts know well but that the API definition never describes. A good LLM will nonetheless try to sort through whatever you give it and produce an answer. But is it the correct answer? Is it the most detailed, most useful answer? And if it isn’t, how do you improve it?

Exposing a separate set of MCP tools on top of our internal API gives us a way to express exactly what we want the model to know. We can relabel properties so that they’re self-describing. We can eliminate properties that are deprecated or for internal use only. We can reimagine our API surface so that the model can do common lookups with one tool call instead of four. And we can accompany each MCP tool with a detailed description that explains how, and most importantly why, the model should use it, including examples of using the tools to answer the kinds of questions we expect our users to ask.
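A minimal sketch of that kind of curation follows. It assumes a raw GraphQL-style record, and every field name in it (`cpuReqMc`, `memReqB`, `legacyScore`, `_internalId`, and so on) is hypothetical rather than part of the Kubex API. The pattern is what matters: rename cryptic properties to self-describing ones, drop deprecated or internal fields, and merge several lookups into a single tool result. Fetching the three inputs, which would be separate API calls in practice, is omitted.

```python
from typing import Any, Dict

# Hypothetical mapping from cryptic API property names to self-describing ones.
FIELD_RENAMES = {
    "cpuReqMc": "cpu_request_millicores",
    "memReqB": "memory_request_bytes",
    "memLimB": "memory_limit_bytes",
    "p95MemB": "memory_usage_p95_bytes",
}

# Hypothetical deprecated or internal-only fields that should never reach the model.
HIDDEN_FIELDS = {"legacyScore", "_internalId"}


def curate(record: Dict[str, Any]) -> Dict[str, Any]:
    """Rename known fields and drop hidden ones; other fields pass through unchanged."""
    return {
        FIELD_RENAMES.get(key, key): value
        for key, value in record.items()
        if key not in HIDDEN_FIELDS
    }


def describe_container(
    container: Dict[str, Any],
    usage: Dict[str, Any],
    recommendation: Dict[str, Any],
) -> Dict[str, Any]:
    """Build one tool result from what would otherwise take several API calls."""
    result = curate(container)
    result.update(curate(usage))
    result["recommendation"] = curate(recommendation)
    return result


# Usage with made-up raw records standing in for three separate API responses.
raw_container = {"name": "api", "cpuReqMc": 250, "memReqB": 268435456,
                 "memLimB": 536870912, "_internalId": "c-91"}
raw_usage = {"p95MemB": 490733568, "legacyScore": 0.42}
raw_recommendation = {"memReqB": 402653184}

result = describe_container(raw_container, raw_usage, raw_recommendation)
print(result)
```

Letting unknown fields pass through keeps the sketch short; a stricter design would use an allow-list so that new, unreviewed API fields never leak to the model by accident.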

As an example, consider a Kubernetes pod that is experiencing out-of-memory (OOM) kills. Could a too-low memory request be contributing to the problem? We found our LLM was eager to assume so. However, memory limits and memory requests behave differently. A limit set below what the container actually uses will inevitably lead to OOM kills, whereas a too-low request can contribute to OOM kills only when the node as a whole is running out of memory. Once our tool description explained how the model could use a different tool to check node memory saturation, and yet another to get historical resource usage trends, it naturally started gathering more information and presenting a nuanced analysis. It’s now common for us to see the model integrating data from three, four, or more tools to give a user a deep and detailed answer.
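The distinction the model needed to learn can be captured as a small heuristic. This is an illustrative sketch, not how Kubex implements it: the inputs (peak container memory usage, memory request and limit, and node memory saturation as a fraction from 0 to 1) and the 0.95 saturation threshold are assumptions, and real OOM behavior also depends on kubelet eviction thresholds and the pod’s QoS class.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ContainerMemory:
    request_bytes: int
    limit_bytes: Optional[int]  # None means no memory limit is set
    peak_usage_bytes: int


def likely_oom_causes(
    c: ContainerMemory,
    node_memory_saturation: float,
    saturation_threshold: float = 0.95,  # hypothetical cut-off, not a Kubernetes constant
) -> List[str]:
    """Return plausible OOM causes, separating limit problems from request problems."""
    causes = []
    # Usage reaching the limit triggers a cgroup OOM kill regardless of node state.
    if c.limit_bytes is not None and c.peak_usage_bytes >= c.limit_bytes:
        causes.append("memory limit too low")
    # A low request only matters when the node itself is short of memory; then
    # containers using more than their request are more likely to be killed.
    if c.peak_usage_bytes > c.request_bytes and node_memory_saturation >= saturation_threshold:
        causes.append("memory request too low for a saturated node")
    return causes


MiB = 2**20
web = ContainerMemory(request_bytes=256 * MiB, limit_bytes=1024 * MiB,
                      peak_usage_bytes=700 * MiB)
quiet_node = likely_oom_causes(web, node_memory_saturation=0.50)
busy_node = likely_oom_causes(web, node_memory_saturation=0.98)
print(quiet_node)
print(busy_node)
```

The same container yields no request-related finding on a quiet node and one on a saturated node, which is why the model has to look up node saturation before blaming the request.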

The pay-off

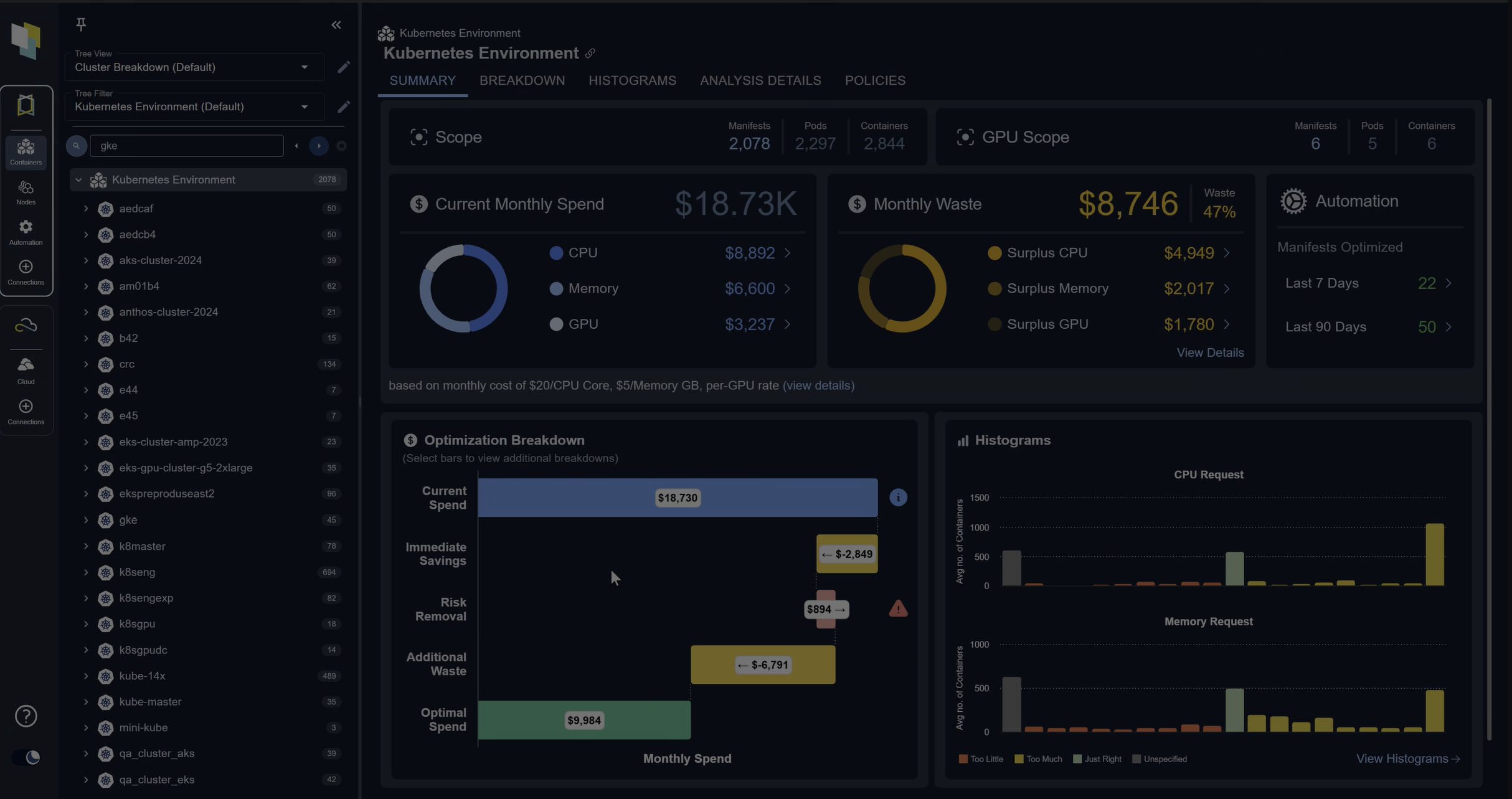

What do we get for having created such a potent package of actionable data and domain understanding? For one thing, you can have a conversation about your cluster:

As soon as we started playing around with this capability, it was clear that it was an amazing complement to the traditional Kubex UI. Kubex has a wealth of data and a slick, configurable UI, but sometimes it’s simply easier to express what you want in words. An LLM can also find pieces of data that don’t live near each other in the UI but that, taken together, offer a valuable and non-obvious insight. It can then suggest a plan of action for you to take in response.

Of course, chat is not the only possible use case, and it may ultimately not be the most important one. We’re now building an AI platform architecture that incorporates our MCP tools into a variety of purpose-built agents that can research the state and history of your system and turn the resulting insights into action. Complex challenges like bin packing, predictive scaling, and Horizontal Pod Autoscaler (HPA) optimization are ideal candidates for LLM-driven solutions, and the first step is to arm the LLM with access to data and analytics.

Conclusion

Direct use of our MCP server is an ideal solution for a certain kind of customer: one who wants to own their LLM experience and keep control of their data, and who already has the infrastructure to plug MCP servers into their environment or is building it now. We’ve been pleased to see some of our largest and most mature customers bring their Kubex data into their production AI systems with minimal friction.

One thing became clear right away, however: many of our customers can’t, or don’t want to, assemble all of the AI infrastructure components themselves, but they still want the flexibility and power of conversing with an LLM about their data. We needed a “batteries included” version that any user of our product could use with zero friction. I’ll write more about how we did that in the future.

Postscript: If you’re looking for practical advice on making your MCP server more effective, I recommend this talk by Jeremiah Lowin and this article from the Block Engineering Blog.